Fitness Furniture

UX Research

Overview

A generative system that uses p5.js, LLMs, and parsed policy and heritage-archive data to visualize the tension between lived and legislated definitions of culture through Tanzanian Kitenge prints.

Timeline

Ongoing (Prototype completed)

My Role

Creative Technologist & Critical Systems Designer

Tools

p5.js, HTML/CSS, JavaScript, OpenCV, Python, MongoDB, Firebase, Large Language Models (LLMs), Node.js

Teammates

AI/ML Engineers ②

Data Visualization Specialist ①, Cultural Anthropologists ②

Policy Analysts ②

WHAT IS A KITENGE?

Critical analysis of culture

INSPIRATION

THE BIG IDEA

MID-FIDELITY PROTOTYPE

Building the system

REFLECTIONS AND NEXT STEPS

1. "Culture" is used vaguely in Tanzanian policies, often to serve political agendas.

2. It's used to justify exclusionary laws on land, gender, and education.

3. Policy definitions of culture differ sharply from lived experiences.

4. Many accept policies as cultural truth due to institutional authority.

5. Heritage archives show a richer, more nuanced view of culture.

6. This gap reinforces marginalization and limits resource access.

THE BIG IDEA

1. Fixed black boundaries represent rigid cultural narratives used in lawmaking.

2. Enclosed patterns and colors shift based on user-selected responses to cultural prompts, symbolizing how lived culture pushes against imposed definitions.

3. Each wall may represent a specific policy theme (e.g., land, gender, governance), turning the space into a layered, explorable data tapestry.

MID-FIDELITY PROTOTYPE

*Please interact with the screen below:

Color Picker Input → Represents participant definitions of culture through color.

Pattern Changes → Simulates how lived culture disrupts static government narratives.

Black Boundaries → Symbolize fixed, institutional definitions of culture from policy.

Visual Evolution → Shows how collective input gradually reshapes the cultural narrative.

Theme Mapping → Each cultural concept (e.g. tradition, governance) links to a specific print zone.
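A minimal sketch of how these pieces could fit together, written in Python rather than the prototype's p5.js. It assumes each theme owns one print zone and that "visual evolution" is modeled as a running average of the colors participants pick. The `PrintZone` class, the helper functions, and the averaging rule are all illustrative assumptions, not the prototype's actual code.

```python
from dataclasses import dataclass, field

BOUNDARY_COLOR = "#000000"  # boundaries stay black regardless of user input


def hex_to_rgb(hex_color):
    """Convert '#rrggbb' to an (r, g, b) tuple of ints."""
    value = hex_color.lstrip("#")
    return tuple(int(value[i:i + 2], 16) for i in (0, 2, 4))


def rgb_to_hex(rgb):
    """Convert an (r, g, b) tuple of ints back to '#rrggbb'."""
    return "#{:02x}{:02x}{:02x}".format(*rgb)


def average_hex(colors):
    """Average a list of hex colors channel by channel (integer division)."""
    channels = zip(*(hex_to_rgb(c) for c in colors))
    return rgb_to_hex(tuple(sum(ch) // len(colors) for ch in channels))


@dataclass
class PrintZone:
    """Hypothetical print zone tied to one cultural theme."""
    theme: str
    fill: str = "#ffffff"
    responses: list = field(default_factory=list)

    def add_response(self, hex_color):
        # Each new color-picker response shifts the zone's fill toward
        # the collective average (an assumed rule for this sketch).
        self.responses.append(hex_color)
        self.fill = average_hex(self.responses)


# Theme names taken from the examples in the text above.
zones = {theme: PrintZone(theme) for theme in ("tradition", "governance")}
zones["tradition"].add_response("#ff0000")
zones["tradition"].add_response("#0000ff")
print(zones["tradition"].fill)  # "#7f007f"
```

Averaging is only one option; a weighted or time-decayed blend would make recent responses more visible while still leaving the black boundaries untouched.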

Building the system

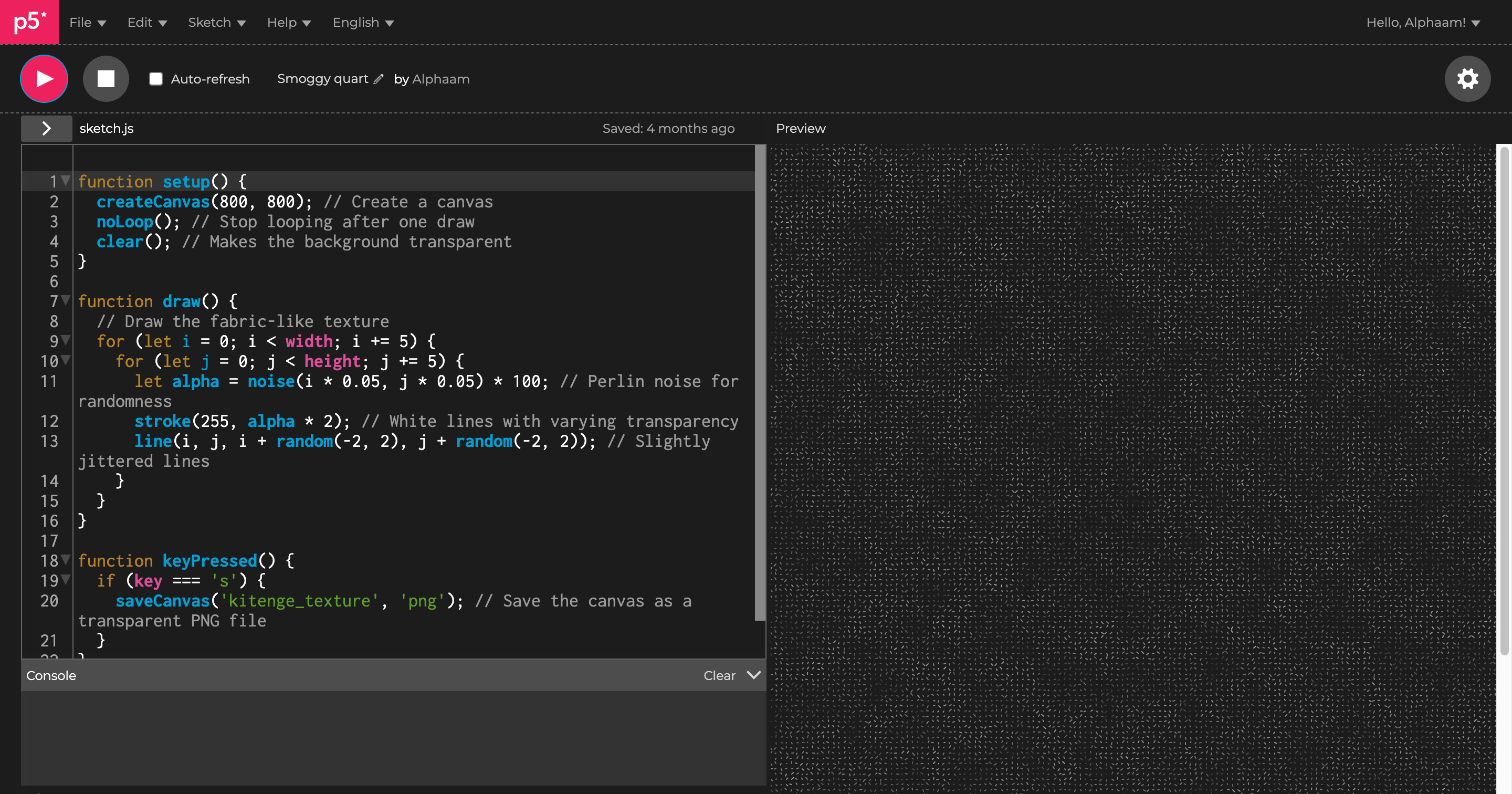

RECREATING THE KITENGE PRINT

Here are my experiments:

SHAPE STUDY

COLOR STUDY

PATTERN STUDY

WORKING ON INSTALLATION

REFLECTIONS AND NEXT STEPS

A key challenge I’ve grappled with is the ethics of using existing Kitenge prints as training material—extracting shapes and color patterns using Python to learn how they are constructed. While this was critical for understanding how to build a generative system, I remain conscious that these prints carry cultural and commercial value, and were created by designers and communities whose work should be credited and respected.

Going forward, I aim to use this foundational understanding not to copy but to create entirely new pattern systems inspired by the cultural logic of Kitenge, rather than the visual specifics of existing ones.

Technically, I’m still learning how to:

1. Use TouchDesigner to implement real-time morphing and pattern transitions on spatial surfaces (like walls).

2. Create patterns that respond dynamically to inputs over time, maintaining generative fluidity while preserving design integrity.

3. Balance visual complexity with performance across large projection surfaces.

Now that I’ve built a working pipeline and understand how to deconstruct and reconstruct Kitenge elements, my next steps are:

1. Design original generative Kitenge-style patterns from scratch.

2. Build a system that allows them to morph in real time in response to user interaction.

3. Expand from screen-based prototypes into a room-scale installation, where the walls will become responsive textiles.

This process has solidified my belief that code can be a form of cultural authorship—and that design systems can critique the systems that design us.

Overview

Investigating the opportunity areas for fitness furniture as a gym alternative in Berkeley, California.

Timeline

Ongoing (Prototype completed)

My Role

UX Researcher

Tools

FigJam, Airtable, NVivo, Dovetail, Statista, Mintel, Google Scholar, Notion.

Teammates (left to right)

Hongxi Pan

Sharon Zhao

Darlene Chen

THE DREAM TEAM!

Overview

A scooter add-on that disperses seeds while you move—bringing greenery to urban spaces and beyond.

Timeline

Ongoing (Prototype completed)

My Role

Product Designer & Fabrication Engineer

Tools

Autodesk Fusion 360, Arduino IDE, Figma, Ultimaker 3D Printer, Adafruit Feather M0 WiFi, P

Teammates

Elizabeth Sun

Sasha Suggs

Context

In this project, I explored how personal transportation can actively give back to the environment. Merging my fascination with sustainable tech and creative problem-solving, I co-designed a seed-dispersing device for scooters - planting seeds and transforming routine journeys into green adventures!

DEMO

Key Objectives

Research & Ideation

Process & Prototyping

Mobile App & Gamification

Final Design

REFLECTIONS AND NEXT STEPS

1. Reduce carbon emissions with a more sustainable personal mobility device.

2. Actively contribute to green spaces through seed dispersal.

3. Encourage positive behavior change via data and gamification.

Research & Ideation

“How can we make a scooter that benefits the environment while in motion?” This led to brainstorming features such as embedded seed capsules, fan-driven dispersion, and data tracking to measure impact.

Key Questions Explored:

1. How can we make a skateboard or scooter that actively benefits the environment?

2. What materials ensure sustainability without compromising functionality?

3. How can users track or visualize their positive impact in real time?

Process & Prototyping

Cardboard Prototyping

3D Printing & Fabrication


Electronics & Real-Time Data Tracking

Real-Time Data Tracking Setup:

1. Connect the Adafruit Feather M0 WiFi to a breadboard for power and control.

2. Mount a push-button on the breadboard: connect one terminal to GND and the other to a digital pin.

3. Using Arduino IDE with the WiFi101 and Adafruit MQTT libraries, write and upload code that starts/stops a timer and logs the seed dispersal event on Adafruit IO Cloud.

4. Watch as each button press creates a real-time data entry, confirming the action.
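The start/stop logic in step 3 can be sketched as follows. This is a Python model of the behavior only, not the Arduino firmware: the real device uses the WiFi101 and Adafruit MQTT libraries, while here `publish` is a stand-in callable and the payload field names are hypothetical.

```python
class DispersalLogger:
    """Toggle a timer on each button press and log the event."""

    def __init__(self, publish):
        # `publish` stands in for sending a message to a cloud feed.
        self.publish = publish
        self.started_at = None

    def on_button_press(self, now):
        if self.started_at is None:
            # First press: start timing a dispersal run.
            self.started_at = now
            self.publish({"event": "start", "t": now})
        else:
            # Second press: stop the timer and report the duration.
            duration = now - self.started_at
            self.started_at = None
            self.publish({"event": "stop", "t": now, "duration_s": duration})


entries = []  # collects what would be sent to the cloud feed
logger = DispersalLogger(entries.append)
logger.on_button_press(now=10.0)
logger.on_button_press(now=25.5)
print(entries)
```

Passing the timestamp in as `now` keeps the logic testable without hardware; on the microcontroller the equivalent value would come from its own clock.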


FINAL DESIGN

STEP 1

STEP 2

STEP 3

STEP 4

REFLECTIONS AND NEXT STEPS

Next Steps:

Data Tracking Enhancement: Integrate GPS heatmaps and additional IoT sensors to improve the measurement and visualization of seed dispersion.

App Refinement: Upgrade the Figma prototype by incorporating machine learning algorithms for more accurate route suggestions and better gamification features.

Expand Collaborations: Initiate discussions with local government and sustainability organizations to explore practical applications and broaden the project’s impact.

I'm so happy to have worked with the team I did in developing this. Thank you to Elizabeth Sun for your detailed design insights and Sasha Suggs for ensuring precise data tracking and app integration.

WHAT DID I BUILD?

Timeline

Ongoing (Prototype completed)

My Role

Product Designer & Fabrication Engineer

Tools

Autodesk Fusion 360, Arduino IDE, Figma, Ultimaker 3D Printer, Adafruit Feather M0 WiFi, P

Teammates

Elizabeth Sun

Sasha Suggs

WHY DID I BUILD IT?

1. Inspired by Don Norman’s The Design of Everyday Things and its critique that modern interfaces engage less with our bodies, I wanted to create more embodied interfaces that involve physical movement.

2. Reflecting on language accessibility, I realized that people who cannot communicate verbally often have to learn alternative languages (like ASL or fingerspelling). These methods can be limiting, as they require the audience to understand the same language.

These reflections led me to my primary question/exploration:

What if language could be whatever the communicator desires, with technology translating it into familiar and accessible outputs?

Breaking this down

So, why a broom?

My Process

1.1. FORM - AFFORDANCES

1.2. FORM - DESIGN

1. Ensuring the size comfortably accommodated all the electronic components without being visually overwhelming.

2. Allowing proper ventilation for these electronics.

Using Autodesk Fusion 360, Tinkercad, and a Prusa 3D printer, I created the enclosure shown on the left using sturdy PLA filament.

2.1. ELECTRONICS

1. MPU-6050 Accelerometer to collect motion data.

2. Arduino UNO R4 WiFi microcontroller to transmit location data to the cloud for processing.

3. The Python and Processing software stacks.

4. Custom location data retrieval and processing API.

5. Arduino IDE.

6. Supporting power and wiring.

3.1. Data collection

3.2. DEFINING THE MOTION

These grouped patterns were then mapped to specific emotional cues to inform the system's eventual writing. On the right, you can see the evolution of my analysis and fine-tuning process, with each colored line representing a different motion pattern.
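One way to picture the mapping from grouped motion patterns to cues is a nearest-centroid lookup. The sketch below is an assumption about how such a mapping could work, not the method used in the project: the two features (mean acceleration magnitude and its range), the centroid values, and the cue names are all hypothetical.

```python
import math

# Hypothetical cluster centroids: (mean magnitude, magnitude range) per cue.
CLUSTERS = {
    "calm": (0.2, 0.05),
    "playful": (0.9, 0.4),
    "urgent": (1.5, 0.8),
}


def summarize(samples):
    """Reduce a window of (x, y, z) accelerometer samples to two features."""
    mags = [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]
    mean = sum(mags) / len(mags)
    spread = max(mags) - min(mags)
    return (mean, spread)


def classify(feature):
    """Return the cue whose centroid is closest to the feature vector."""
    return min(CLUSTERS, key=lambda cue: math.dist(feature, CLUSTERS[cue]))


window = [(0.2, 0.0, 0.0), (0.25, 0.0, 0.0), (0.15, 0.0, 0.0)]
print(classify(summarize(window)))  # "calm"
```

In practice the centroids would come from the recorded motion data rather than hand-picked numbers, and more features would likely be needed to separate similar gestures.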

3.3. RETRACING MOTION

4.1. ADDING THE TEXT

4.2. SOUND

The result is an instrument of music and text-based communication.

FINAL RESULTS, REFLECTIONS AND NEXT STEPS

This project was not only fun but also an enlightening opportunity to learn, through participant interviews, about the communication limitations faced by disabled individuals. I learned that inclusive technology does not isolate its users; rather, it works to provide them with access and presence in the shared spaces around us.

While the project is a successful prototype, I recognize the need for more efficient sensors to handle the real-time data transfers and processing taking place. I also envision this project evolving into a wearable product featuring a more streamlined motion cluster training system and a comprehensive language-to-cluster mapping, which would allow for even more detailed communication.